The multithreading preview for Crystal was just merged yesterday, so I’ve been playing around with it a bit to check its performance. Sure enough, I was able to saturate all 32 cores on a DigitalOcean droplet with `32.times { spawn { loop {} } }`:

I wrote up a quick `HTTP::Server` app to check performance (I don’t know how to get a local build of Crystal to use shards, or I’d test a real app). Here’s the single-thread benchmark (via wrk, output trimmed):

```
Thread Stats   Avg       Stdev     Max     +/- Stdev
  Latency    199.01us   90.71us   8.53ms    97.75%

Requests/sec:  50590.09
```

For comparison, the v0.30.1 release gets 50013 req/sec with the same code on my machine. That’s still in the same ballpark, and it’s really heartening to know that the changes didn’t make single-thread mode any slower. In multi-thread mode with a single thread, performance did drop a bit, to 47457 req/sec: roughly 5% below v0.30.1 (about 6% below the single-thread number above).

With the `preview_mt` flag enabled (`crystal run -Dpreview_mt --release check_mt.cr`) on Crystal master:

```
Thread Stats   Avg       Stdev     Max     +/- Stdev
  Latency     93.54us   77.55us   3.36ms    96.54%

Requests/sec: 108131.26
```

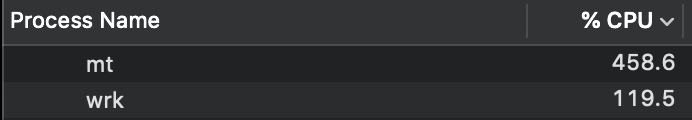

This is awesome: we get more throughput from a single process, and latency is lower! In fact, this is the first time I’ve been able to get wrk to consume more than 100% CPU!

Unfortunately, the speedup isn’t proportional. The first version of the app consumes 100% CPU and the second consumes 460%, but rather than a 4.6x improvement in throughput, we only get 2.14x. Still a win, just not the one I was expecting. This isn’t intended as a criticism, just an observation that the preview probably isn’t ready yet. :-)
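Here’s the arithmetic behind that, using the two Requests/sec figures and the CPU readings from the runs above:

```latex
\begin{aligned}
\text{throughput speedup} &= \frac{108131.26}{50590.09} \approx 2.14 \\
\text{CPU increase} &= \frac{460\%}{100\%} = 4.6 \\
\text{scaling efficiency} &= \frac{2.137\ldots}{4.6} \approx 0.46 \\
\text{throughput per busy core} &= \frac{108131.26}{4.6} \approx 23{,}507 \ \text{req/s}
\end{aligned}
```

In other words, each busy core serves roughly 23,500 req/sec with multithreading on, versus about 50,600 req/sec for the single core in the single-thread run.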

If someone can drop some tips on how to get a locally built Crystal to compile with shards, I’d be happy to run this against a real app instead of poorly simulated work. I have a feeling that throughput might scale more proportionally when each request does real work, since scheduling fibers would likely be a much smaller share of the total work being done. Getting the DB to keep up might be challenging, though.

In fact, the runtime never creates new threads once it begins executing your code. The scheduler spins up a thread pool during bootstrapping, and each new fiber is assigned to one of those threads.
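To make that concrete, here’s a small hypothetical sketch (not the benchmark code from this post) that spawns far more fibers than there are cores. Under `-Dpreview_mt`, all of them should be multiplexed onto the fixed pool of worker threads rather than getting a thread each. If I understand the preview correctly, the pool size is read from the `CRYSTAL_WORKERS` environment variable (4 by default), so treat the run command below as an assumption. The file name `fibers.cr`, the fiber count, and the simulated work are all arbitrary.

```crystal
# fibers.cr: hypothetical sketch, not the benchmark code from this post.
# Build: crystal build -Dpreview_mt --release fibers.cr
# Run:   CRYSTAL_WORKERS=8 ./fibers   (assumed env var for the worker pool size)

FIBERS = 1_000

# Each fiber reports its result over this unbuffered channel.
results = Channel(Int64).new

FIBERS.times do |i|
  # spawn creates a fiber, not a thread; the scheduler runs it on
  # one of the worker threads that were started during bootstrapping.
  spawn do
    sum = 0_i64
    10_000.times { |j| sum += (i * j) % 7 } # simulated CPU-bound work
    results.send(sum)
  end
end

# Block the main fiber until every spawned fiber has reported back.
total = 0_i64
FIBERS.times { total += results.receive }
puts "#{FIBERS} fibers finished, total = #{total}"
```

The same program also builds and runs without `-Dpreview_mt`; in that case every fiber simply shares the one thread.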
